You can take advantage of all the software you have installed locally without configuring one or more remote clusters.

#INSTALL SPARK ON WINDOWS#

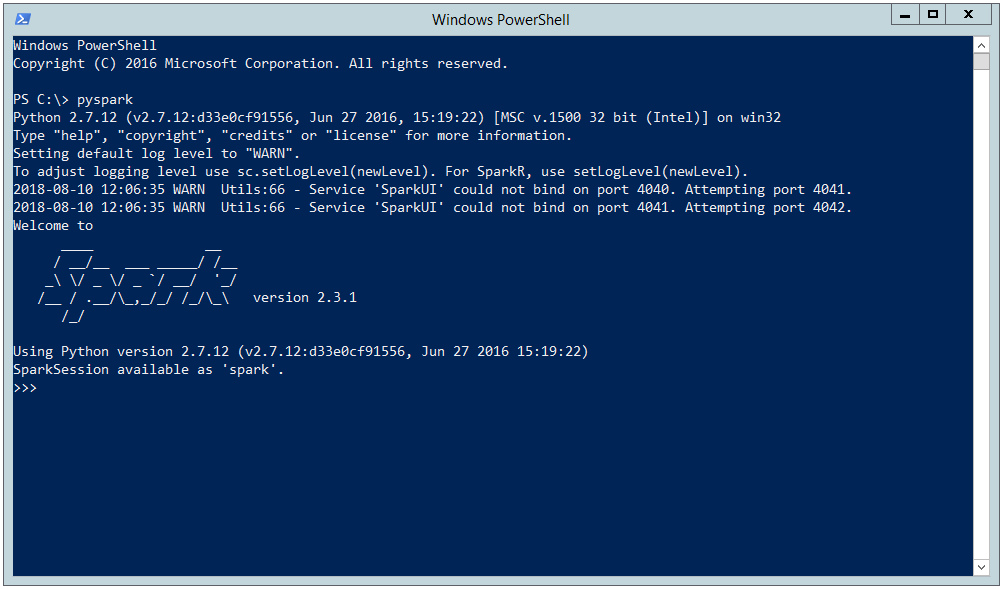

Install Apache Spark in standalone mode on Windows (last updated: 15 Mar, 2021). Apache Spark is a fast, unified analytics engine for cluster computing on large data sets, in big data environments such as Hadoop, and it aims to run programs in parallel across multiple nodes. So here we go: download the Spark installer file from the link above, save the downloaded file to your computer, and then double-click the downloaded Spark installer file. The next section shares the steps to follow to download Spark for a Windows PC.
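Once the installer has finished, it can help to confirm that the machine can actually find Spark. The sketch below is a minimal check under stated assumptions: it assumes the conventional setup in which a `SPARK_HOME` environment variable points at the Spark folder and a `java` executable is on the `PATH`. The function name `check_spark_env` is hypothetical, and the installer described here may configure things differently.

```python
import os
import shutil


def check_spark_env(env=None, which=shutil.which):
    """Report whether the usual Spark prerequisites are visible.

    Assumptions (not taken from the article): SPARK_HOME is set to the
    Spark folder and a `java` executable is on PATH.
    """
    env = os.environ if env is None else env
    spark_home = env.get("SPARK_HOME")
    return {
        # True when the variable is defined and non-empty
        "spark_home_set": bool(spark_home),
        # True when the variable points at an existing directory
        "spark_home_exists": bool(spark_home) and os.path.isdir(spark_home),
        # True when a java executable can be resolved from PATH
        "java_on_path": which("java") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_spark_env().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

Any line reported as `missing` usually points at an environment variable that still needs to be set, or at a terminal that was opened before the installation finished.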

#HOW TO INSTALL SPARK ON WINDOWS#

This section covers how to download and install Spark on a Windows 10 PC or laptop. It may be easier to configure your own local development environment than it is to configure the Jupyter installation on the cluster. The `dotnet` command creates a new application of type `console` for you.

In your command prompt or terminal, run the following commands to create a new console application: `dotnet new console -o MySparkApp`, then `cd MySparkApp`.

Spark is fully GDPR compliant, and to make everything as safe as possible, we encrypt all your data and rely on the secure cloud infrastructure provided by Google Cloud. Spark is free for individual users, yet it makes money by offering Premium plans for teams.

There can be a number of reasons why you might want to install Jupyter on your computer and then connect it to an Apache Spark cluster on HDInsight. Even though Jupyter notebooks are already available on the Spark cluster in Azure HDInsight, installing Jupyter on your computer gives you the option to create your notebooks locally, test your application against a running cluster, and then upload the notebooks to the cluster. You only need a cluster to test your notebooks against, not to manually manage your notebooks or a development environment. You can work with notebooks locally without even having a cluster up. You can also have a collaborative environment where multiple users can work with the same notebook. You can use GitHub to implement a source control system and have version control for the notebooks. With the notebooks available locally, you can connect to different Spark clusters based on your application requirement.

To upload the notebooks to the cluster, you can either upload them using the Jupyter notebook that is running on the cluster, or save them to the /HdiNotebooks folder in the storage account associated with the cluster. For more information on how notebooks are stored on the cluster, see Where are Jupyter notebooks stored?

Problem Formulation: Given a PyCharm project.

This article is for the Java developer who wants to learn Apache Spark but doesn't know much about Linux, Python, Scala, R, and Hadoop. Around 50% of developers use a Microsoft Windows environment. The Spark framework is a distributed engine for set computations on large-scale data, facilitating distributed data analytics and machine learning.
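The description of Spark as a distributed engine for computations over large data sets can be made concrete with a toy example. The sketch below is plain Python, not Spark's API: it splits the input into partitions, counts words in each partition independently, and then merges the partial results. All function names are hypothetical, and the partitions are processed one after another here, whereas a cluster would process them in parallel on different nodes.

```python
from collections import Counter
from functools import reduce


def split_into_partitions(records, num_partitions):
    """Deal records round-robin into num_partitions lists."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def count_words(partition):
    """Per-partition step: count the words in this partition only."""
    counts = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


def word_count(records, num_partitions=2):
    """Count words per partition, then merge the partial counts."""
    partitions = split_into_partitions(records, num_partitions)
    # On a real cluster each partition would be handled by a different worker.
    partials = [count_words(p) for p in partitions]
    return reduce(lambda a, b: a + b, partials, Counter())


lines = ["spark runs on windows", "spark runs locally", "windows pc"]
print(word_count(lines)["spark"])  # 2
```

The result does not depend on how many partitions are used, which is the property that lets an engine spread the same job over one machine or many.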
